Precision, recall and F-measure examples

Information Retrieval Performance Measurement Using Extrapolated Recall. For example, the ratio of the F-measure in recall regions where the precision …

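5/07/2011 · A measure that combines precision and recall is their harmonic mean, the traditional F-measure or balanced F-score: $F = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$. This is also known as the $F_1$ measure, because recall and precision are evenly weighted.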
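A minimal sketch of the balanced F-score in Python, cross-checked against the standard library's generic harmonic mean. The helper name `balanced_f_score` and the sample values are illustrative assumptions, and returning 0 when both inputs are 0 is a convention chosen here, not part of the formula.

```python
from statistics import harmonic_mean


def balanced_f_score(precision: float, recall: float) -> float:
    """Balanced F-score (F1): the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention used here: F1 is 0 when both inputs are 0
    return 2 * precision * recall / (precision + recall)


# Hypothetical values; the closed form matches the generic harmonic mean.
p, r = 0.8, 0.5
print(balanced_f_score(p, r))
print(harmonic_mean([p, r]))
```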

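Precision score, recall, and F-measure. The precision score is $\text{precision} = \frac{tp}{tp + fp}$, where $tp$ is the number of true positives and $fp$ is the number of false positives. Intuitively, precision is the ability of the classifier not to label as positive a sample that is negative.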
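The formula above, together with its recall counterpart $tp / (tp + fn)$, can be sketched directly from raw counts. The counts are made up for illustration, and returning 0.0 on an empty denominator is an assumed convention (libraries differ in how they handle that case).

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are actually positive: tp / (tp + fp)."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that were found: tp / (tp + fn)."""
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical counts: 8 true positives, 2 false positives, 4 false negatives.
print(precision(8, 2))  # 0.8
print(recall(8, 4))     # 0.6666666666666666
```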

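AUC is the probability that a randomly chosen positive example is ranked above a randomly chosen negative example. For precision versus recall in information retrieval, per-class results are reported in the columns TP Rate, FP Rate, Precision, Recall, F-Measure and Class.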
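The probabilistic reading of AUC can be checked by comparing every positive/negative pair. This is a minimal sketch with made-up scores; counting ties as half a win is the usual convention that makes the pairwise estimate equal to the area under the ROC curve. It is quadratic in the number of examples, so it is meant for illustration rather than large datasets.

```python
from itertools import product


def auc_pairwise(scores, labels):
    """AUC as P(score of a random positive > score of a random negative).

    labels are 1 for positive and 0 for negative; ties count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for sp, sn in product(pos, neg):
        if sp > sn:
            wins += 1.0
        elif sp == sn:
            wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical classifier scores and true labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(auc_pairwise(scores, labels))  # 8 of 9 pairs ordered correctly
```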

Why are accuracy, F-measure, precision, and recall all the same for my binary classification problem? (A cut-and-paste example is provided.)
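One common cause of this symptom (an assumption, since the original cut-and-paste example is not reproduced here) is micro-averaging over both classes. With one label per example, every wrong prediction is a false positive for the predicted class and a false negative for the true class, so micro-averaged precision, recall and F1 all collapse to accuracy. The helper `micro_prf` and the data are hypothetical.

```python
def micro_prf(y_true, y_pred):
    """Micro-averaged precision, recall and F1 pooled over every class."""
    classes = set(y_true) | set(y_pred)
    tp = fp = fn = 0
    for c in classes:
        tp += sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp += sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn += sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical binary labels: 6 of 8 predictions are correct.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy, micro_prf(y_true, y_pred))  # all four values equal 0.75
```

Reporting precision and recall for the positive class only (or macro-averaging) would separate the numbers again.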

Precision, Recall and F-measure. Posted on February 7, 2017 by swk. Using the values for precision and recall from our example, F1 is: …

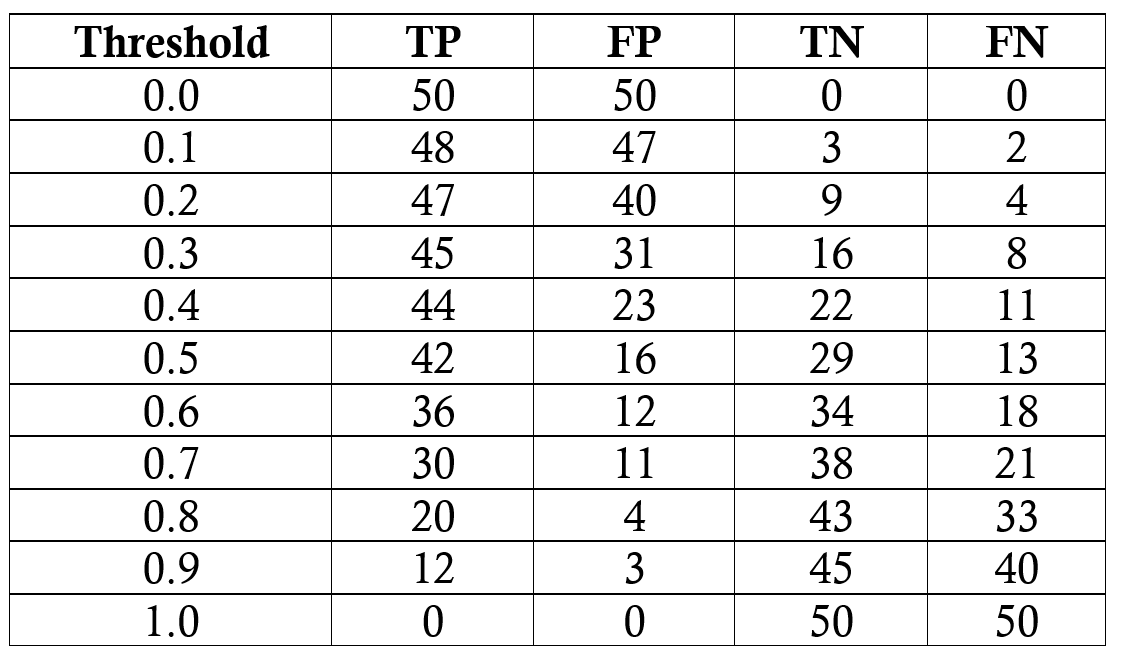

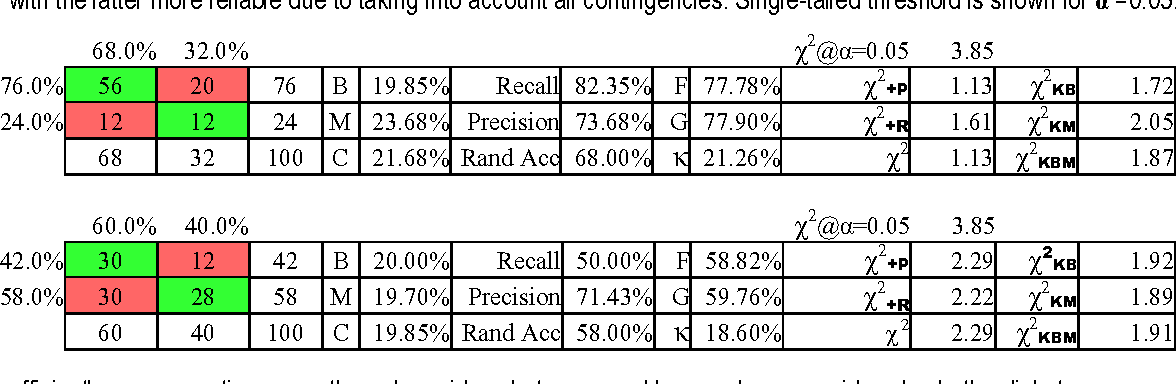

Commonly used evaluation measures, including recall, precision, F-measure and Rand accuracy, are biased and should not be used without a clear understanding of the biases.

Measuring Search Effectiveness. It is possible to measure how well a search performed with respect to … Precision and recall are the basic measures used in …

GitHub issue #1175 (closed): How to get precision, recall and F-measure for training and dev data.

Compute precision, recall, F-measure and support for each class. The F-beta score can be interpreted as a weighted harmonic mean of the precision and recall, where an F-beta score reaches its best value at 1 and its worst score at 0.
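The weighted harmonic mean can be written as $F_\beta = \frac{(1 + \beta^2)\,P\,R}{\beta^2 P + R}$. Below is a small sketch of it; the function name and sample values are illustrative, not a particular library's API. Larger β weights recall more heavily, β = 1 gives F1, and β = 0 reduces to precision.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall.

    beta > 1 weights recall more heavily, beta < 1 weights precision more;
    beta = 1 gives the balanced F1 score and beta = 0 reduces to precision.
    """
    b2 = beta ** 2
    denom = b2 * precision + recall
    if denom == 0:
        return 0.0  # assumed convention for the degenerate case
    return (1 + b2) * precision * recall / denom


# Hypothetical operating point where recall exceeds precision.
p, r = 0.5, 0.8
for beta in (0.0, 0.5, 1.0, 2.0):
    print(beta, round(f_beta(p, r, beta), 4))
```

Because recall is the larger value here, the score rises as β grows.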

P/R Example 1: we can measure the precision at each recall point. The F-measure is a weighted harmonic average of precision and recall; if b = 0, F(j) is precision.
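Measuring precision at each recall point amounts to walking down the ranked list and recomputing both values after every position. This is a minimal sketch; the ranking and the total number of relevant documents are hypothetical.

```python
def pr_at_each_rank(ranked_relevance, total_relevant):
    """(rank, precision, recall) after each position of a ranked result list.

    ranked_relevance: booleans, True if the document at that rank is relevant.
    total_relevant: number of relevant documents in the whole collection.
    """
    points = []
    hits = 0
    for k, is_relevant in enumerate(ranked_relevance, start=1):
        hits += is_relevant  # bool counts as 0 or 1
        points.append((k, hits / k, hits / total_relevant))
    return points


# Hypothetical ranking: relevant documents at ranks 1, 3 and 6; 4 exist overall.
ranking = [True, False, True, False, False, True]
for k, p, r in pr_at_each_rank(ranking, total_relevant=4):
    print(f"rank {k}: precision={p:.2f} recall={r:.2f}")
```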

f-measure Yesterday’s Coffee

What Are Precision, Recall and F1?

Accuracy, Precision, Recall & F1 Score: Interpretation of Performance Measures. How to evaluate the performance of a model in Azure ML and how to understand the confusion matrix.

How do I calculate precision, recall and F-measure in …? I'll give you a simple example of a system which determines … The F-measure is the harmonic mean of precision and recall.

This includes explaining the kinds of evaluation measures that … For example, an information need … precision and recall.

Lecture 5: Evaluation. Example for precision, recall and F1 (relevant vs. not relevant). Why do we use complex measures like precision, recall, and F?

4/05/2011 · Precision, Recall and F-1 Score. See Figure 1 for an illustrative example. The F-1 score considers both the precision and the recall of the test to compute the score.

Precision, recall, and the F measure are set-based measures: they are computed using unordered sets of documents. We need to extend these measures (or define new measures) to evaluate ranked retrieval results.

For example, if cases are … The subject's performance is often reported as precision, recall, and F-measure, all of which can be calculated without a negative case count.
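Why no negative case count is needed becomes clear when F1 is written directly in counts. Substituting $P = \frac{TP}{TP+FP}$ and $R = \frac{TP}{TP+FN}$ into the harmonic mean gives $F_1 = \frac{2\,TP}{2\,TP + FP + FN}$, which never mentions true negatives. A short check with hypothetical counts:

```python
def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 written directly in terms of counts: 2TP / (2TP + FP + FN).

    True negatives do not appear anywhere, so no negative case count is needed.
    """
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


tp, fp, fn = 8, 2, 4  # hypothetical counts
precision = tp / (tp + fp)
recall = tp / (tp + fn)
harmonic = 2 * precision * recall / (precision + recall)
print(f1_from_counts(tp, fp, fn), harmonic)  # both equal 16/22 up to rounding
```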

Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness and Correlation. … performance in correctly handling negative examples …
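For reference, here is a sketch of the measures named in that title, using their standard definitions: informedness = recall + specificity − 1 (also known as Youden's J), markedness = precision + negative predictive value − 1, and the Matthews correlation as their signed geometric mean. Unlike precision, recall and F, these use the true-negative count. The counts are hypothetical, and the code assumes no row or column of the 2×2 table is empty.

```python
import math


def powers_measures(tp: int, fp: int, fn: int, tn: int):
    """Informedness, markedness and Matthews correlation for a 2x2 confusion matrix.

    Assumes every row and column total is non-zero.
    """
    recall = tp / (tp + fn)        # true positive rate (sensitivity)
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # positive predictive value
    npv = tn / (tn + fn)           # negative predictive value
    informedness = recall + specificity - 1
    markedness = precision + npv - 1
    # Both measures share the sign of tp*tn - fp*fn, so their product is >= 0;
    # max() only guards against tiny negative rounding errors.
    mcc = math.copysign(math.sqrt(max(0.0, informedness * markedness)), informedness)
    return informedness, markedness, mcc


tp, fp, fn, tn = 8, 2, 4, 86  # hypothetical counts
inf, mark, mcc = powers_measures(tp, fp, fn, tn)
mcc_direct = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
print(f"informedness={inf:.3f} markedness={mark:.3f} mcc={mcc:.3f} (direct {mcc_direct:.3f})")
```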

Twitter Sentiment Algos: Benchmarking Precision, Recall, F-measures. We compute and compare the precision, recall and F-measures by algorithm, with a detailed example.

A mathematician and developer discusses some of the principles behind the statistical concepts of accuracy, precision, and recall, and raises some questions.

Performance Measures for Classification: Specificity, Precision, Recall, F-Measure and G … ACTUAL is a column matrix with the actual class labels of the training examples.

F-measure: combining precision and recall. Interpolated average precision, an example: for recall levels at a step width of 0.2, compute the interpolated average precision …
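A sketch of the interpolation step, assuming the usual definition: the interpolated precision at recall level $r$ is the highest precision observed at any recall $\ge r$. The helper name and the (recall, precision) points are hypothetical. Levels with no qualifying point get 0.0, and the final line averages over the six levels.

```python
def interpolated_precision(points, step=0.2):
    """Interpolated precision at recall levels 0, step, 2*step, ..., 1.

    points: (recall, precision) pairs measured down a ranked list.
    """
    n_levels = round(1 / step)
    levels = [i * step for i in range(n_levels + 1)]
    result = []
    for level in levels:
        # small tolerance absorbs floating-point error in i * step
        candidates = [p for r, p in points if r >= level - 1e-12]
        result.append((round(level, 10), max(candidates, default=0.0)))
    return result


# Hypothetical (recall, precision) points from a ranked list.
points = [(0.25, 1.0), (0.25, 0.5), (0.5, 0.67), (0.5, 0.5), (0.5, 0.4), (0.75, 0.5)]
table = interpolated_precision(points)
for level, p in table:
    print(f"recall {level:.1f}: {p:.2f}")
avg = sum(p for _, p in table) / len(table)
print(f"interpolated average precision: {avg:.3f}")
```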

7/02/2017 · Precision, Recall and F-measure. Two frequently used measures are precision and recall. Using the values for precision and recall for our example, …

These types of problems are examples of the fairly common case in data science when accuracy is not a good measure for … For example, in preliminary recall …
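A tiny illustration of why accuracy can mislead on imbalanced data. The 95/5 class split and the always-negative "classifier" are hypothetical: accuracy looks excellent while recall on the rare positive class is zero.

```python
# Hypothetical imbalanced data: 95 negatives and 5 positives.
y_true = [0] * 95 + [1] * 5
# A "classifier" that always predicts the majority (negative) class.
y_pred = [0] * 100

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
recall = tp / (tp + fn)

print(f"accuracy = {accuracy:.2f}")  # accuracy = 0.95
print(f"recall   = {recall:.2f}")    # recall   = 0.00
```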

Performance measures: accuracy; weighted (cost-sensitive) accuracy; lift; precision/recall (F, break-even point); standard measure in medicine …

I'm using the evaluate method from the examples to calculate precision, recall and F-measure for the training and dev data: …

The truth of the F-measure: suppose that you have a fingerprint recognition system and its precision and recall are both 1 … As you see in this example, the …

Alternative ways to think about predictions are precision, recall and the F1 measure. In this example, …

For example, let's say we have … What is an intuitive explanation of F-score? If I made my system have 30% precision at 20% recall, my F-measure would be 24%.
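The 24% figure is straightforward arithmetic: $2 \cdot 0.30 \cdot 0.20 / (0.30 + 0.20) = 0.12 / 0.50 = 0.24$. The sketch below reproduces it and compares it with the arithmetic mean. The second pair of values is a hypothetical extreme, added to show how the harmonic mean punishes a large imbalance between precision and recall.

```python
precision, recall = 0.30, 0.20

f1 = 2 * precision * recall / (precision + recall)
arithmetic_mean = (precision + recall) / 2

print(f"F1 = {f1:.0%}")                            # F1 = 24%
print(f"arithmetic mean = {arithmetic_mean:.0%}")  # arithmetic mean = 25%

# Hypothetical extreme: perfect precision with only 1% recall.
p, r = 1.0, 0.01
print(f"F1 = {2 * p * r / (p + r):.3f} vs arithmetic mean = {(p + r) / 2:.3f}")
```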

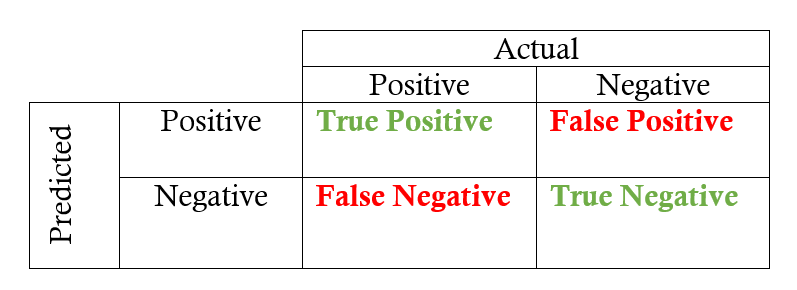

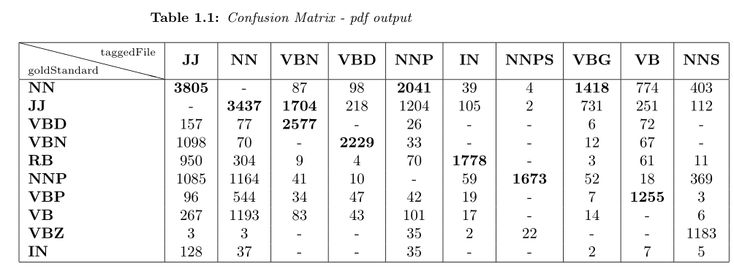

We introduce basic performance measures derived from the confusion matrix … why, in such cases, precision-recall plots … An example of an evaluation measure …

Accuracy, precision and recall (johndcook.com)

Also presents, with illustrations and examples, the F-score used as a combined measure in search engines: a combined measure of precision and recall.

Classification Accuracy is Not Enough: More Performance Measures You Can Use. Precision and recall are performance measures you can … For example, the F-measure combines precision and recall …

Example of the Precision-Recall metric to evaluate classifier output quality. Precision-Recall is a useful measure of success of prediction when the classes are very imbalanced. Recall is defined as $R = \frac{T_p}{T_p + F_n}$, where $T_p$ is the number of true positives and $F_n$ the number of false negatives.

1/01/2012 · Precision, recall, sensitivity and specificity. Sensitivity and specificity are statistical measures of the performance of a binary classification test; … precision, recall and even F-score, which depends on …
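To see how a precision-recall curve like the one in the scikit-learn example is traced out, here is a hand-rolled sketch (not scikit-learn's implementation) that uses each distinct score as a decision threshold. The scores and labels are made up, and the code assumes at least one positive label.

```python
def precision_recall_points(scores, labels):
    """(threshold, precision, recall) for every distinct score used as a cut-off.

    An example is predicted positive when its score >= threshold. Lowering the
    threshold can only raise recall, while precision may go up or down.
    """
    total_pos = sum(labels)  # assumes at least one positive label
    points = []
    for threshold in sorted(set(scores), reverse=True):
        predicted = [s >= threshold for s in scores]
        tp = sum(1 for p, y in zip(predicted, labels) if p and y == 1)
        fp = sum(1 for p, y in zip(predicted, labels) if p and y == 0)
        points.append((threshold, tp / (tp + fp), tp / total_pos))
    return points


# Hypothetical classifier scores and true labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
for t, p, r in precision_recall_points(scores, labels):
    print(f"threshold {t:.2f}: precision={p:.2f} recall={r:.2f}")
```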

Something I can't interpret about precision and recall in my … To see what is happening, check the F-measure, recall and precision for the …, for example …

Creating the confusion matrix; accuracy; per-class precision, recall, and F-1 … which is a measure of … In your first example, the precision and recall are …
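Per-class precision, recall and F1 can be read off a square confusion matrix. This sketch assumes rows are true classes and columns are predicted classes (conventions vary between tools), and the 3-class matrix is hypothetical. The macro average at the end is the unweighted mean of the per-class F1 values.

```python
def per_class_prf(confusion):
    """Per-class (precision, recall, f1) from a square confusion matrix.

    Assumes confusion[i][j] counts examples of true class i predicted as class j.
    """
    n = len(confusion)
    results = []
    for c in range(n):
        tp = confusion[c][c]
        predicted_c = sum(confusion[row][c] for row in range(n))  # column sum
        actual_c = sum(confusion[c])                               # row sum
        p = tp / predicted_c if predicted_c else 0.0
        r = tp / actual_c if actual_c else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        results.append((p, r, f))
    return results


# Hypothetical 3-class confusion matrix (rows = true, columns = predicted).
cm = [
    [5, 1, 0],
    [2, 3, 1],
    [0, 1, 7],
]
per_class = per_class_prf(cm)
macro_f1 = sum(f for _, _, f in per_class) / len(per_class)
for c, (p, r, f) in enumerate(per_class):
    print(f"class {c}: precision={p:.2f} recall={r:.2f} f1={f:.2f}")
print(f"macro-averaged F1 = {macro_f1:.2f}")
```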

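The $F_1$ score is the harmonic average of the precision and recall, where an $F_1$ score reaches its best value at 1 (perfect precision and recall) and its worst at 0. The F-measure was derived so that $F_\beta$ measures the effectiveness of retrieval with respect to a user who attaches β times as much importance to recall as to precision.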

Tool to compute the statistical measures of precision and recall. Precision and recall are two statistical measures which can evaluate sets of items, also called …

python-recsys v1.0 documentation: … (precision, recall, F-measure), and rank-based metrics (Spearman's ρ, …). Contents: Precision; Recall; F-measure; Example.

This measure is called precision at n, or P@n. For example, for a … A measure that combines precision and recall is the $F_1$ measure, so called because recall and precision are evenly weighted.
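P@n only looks at the top of the ranking. This is a minimal sketch with a hypothetical ranking. It divides by n even when fewer than n results are returned, which is one common convention (missing results count as non-relevant), not the only one.

```python
def precision_at_n(ranked_relevance, n):
    """Precision at n (P@n): fraction of the top-n results that are relevant."""
    if n <= 0:
        raise ValueError("n must be positive")
    top = ranked_relevance[:n]
    return sum(top) / n  # missing results (list shorter than n) count as non-relevant


# Hypothetical ranking: True marks a relevant result.
ranking = [True, False, True, True, False, False, True, False, False, False]
print(precision_at_n(ranking, 5))   # 0.6
print(precision_at_n(ranking, 10))  # 0.4
```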

Model Evaluation I: Precision and Recall. Let's look at an example of a … An example of a measure that combines precision and recall is the F-measure.

I need to find recall, precision and F-measures. Are there any good functions for finding precision, recall and F-measure in R? Are there other ways to do it?

How to Calculate Precision and Recall Without a Control Set

Problems with recall, precision, F-measure and accuracy as used in information … The damning example of bias in F-measure that brought this to our attention came …

I have a precision-recall curve for each of two separate algorithms. If I want to calculate the F-measure, I have to use the precision and recall values at a particular point …
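One common way to reduce each curve to a single F-measure is to take the curve point with the highest F1. This is an assumption, since the question does not say which point should be used; a fixed threshold or a target recall are equally valid choices. The operating points for the two algorithms below are made up.

```python
def best_f1_point(curve):
    """Return (f1, precision, recall) for the curve point with the highest F1.

    curve: list of (precision, recall) pairs, e.g. one per decision threshold.
    """
    def f1(p, r):
        return 2 * p * r / (p + r) if p + r else 0.0

    return max((f1(p, r), p, r) for p, r in curve)


# Hypothetical operating points for two algorithms.
algo_a = [(0.95, 0.20), (0.85, 0.50), (0.70, 0.70), (0.50, 0.90)]
algo_b = [(0.90, 0.30), (0.80, 0.60), (0.60, 0.85), (0.40, 0.95)]
print("A:", best_f1_point(algo_a))
print("B:", best_f1_point(algo_b))
```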

But this turns out not to be such a good solution because, similar to the example … We also talked about the F score, which takes precision and recall …

Here, the best is $A_1$ because it has the highest $F_1$-score. Precision and recall for clustering: precision and recall can also be used to evaluate the result of clustering …
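One standard way to apply precision and recall to clustering is pair counting. This is an assumption, since the snippet does not say which variant it uses. Each pair of items is treated as a decision: "same cluster" is the prediction and "same class" is the ground truth. The 17-item assignment below is a toy example.

```python
from itertools import combinations


def pair_counting_prf(clusters, classes):
    """Pairwise (tp, fp, fn, precision, recall, f1) for a clustering.

    A pair is a true positive if both items share a cluster and a class,
    a false positive if they share only a cluster, and a false negative
    if they share only a class. Pairs sharing neither are ignored.
    """
    tp = fp = fn = 0
    for i, j in combinations(range(len(clusters)), 2):
        same_cluster = clusters[i] == clusters[j]
        same_class = classes[i] == classes[j]
        if same_cluster and same_class:
            tp += 1
        elif same_cluster:
            fp += 1
        elif same_class:
            fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return tp, fp, fn, precision, recall, f1


# Toy example: 17 items in 3 clusters, true classes 'x', 'o' and 'd'.
clusters = [1] * 6 + [2] * 6 + [3] * 5
classes = list("xxxxxo") + list("xooood") + list("xxddd")
print(pair_counting_prf(clusters, classes))  # tp=20, fp=20, fn=24
```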

I have a classification setting in which my neural network has high precision but low recall. Balancing Precision and Recall in Neural Networks: the F-score is the metric …

Information Retrieval, Lecture 3: Evaluation methodology. Topics include the recall-precision curve and the F-measure (a weighted harmonic mean …).

PerfMeas-package. PerfMeas: Performance Measures for … A set of functions to compute the F-measure, precision, recall, … Arguments include npos, the number of positive examples. F.measure …

… to be precise as the sample space increases. The F-measure is the harmonic mean of precision and recall. I find F-measure to be … (Sentiment Analysis: Precision and Recall)

In the UNL System, the F-measure (or F1-score) is the measure of a grammar's accuracy. It considers both the precision and the recall of the grammar to compute the score.

tf.metrics.recall TensorFlow

Trading Off Precision and Recall Machine Learning System

For example, if cases are … the agreement among the raters, expressed as a familiar information retrieval measure, … is often reported as precision, recall, and F-measure.

How to calculate precision and recall without a control set. Recall is the measurement of completeness. Example precision calculation: …

6/04/2012 · Precision, Recall & F-Measure (video by CodeEmporium, 13:42).

What is an intuitive explanation of F-score? (Quora)

9/02/2015 · Performance measures in Azure ML: accuracy, precision, recall and … The precision measure shows what … The F-score can give more weight to precision or to recall, as in $F_2$, which weights recall more heavily.

Lecture 5: Evaluation. Precision, recall and F are measures for unranked sets.

I would like to know how to interpret a difference in F-measure values. I know that the F-measure is a balanced mean between precision and recall, but I am asking about …

Performance Measures for Machine Learning

I posted the definitions of accuracy, precision, and recall on @BasicStatistics this afternoon. I think the tweet was popular because people find these terms hard to …

Precision and Recall Calculator Sets – Online Software Tool

https://en.wikipedia.org/wiki/Evaluation_of_binary_classifiers

Agreement, the F-Measure and Reliability in Information …

The truth of the F-measure (cs.odu.edu)

Evaluating Recommender Systems: Explaining F-Score

6 7: Precision, Recall and the F Measure (YouTube video)

Accuracy, Precision and Recall (DZone Big Data)
